From Perception to Action

This post gives a high-level overview of the neuroscience of how the whole perception-action pathway works in humans. Building on top of the ideas from here, we explore the two-stream hypothesis as well as other peculiarities related to how humans use sensory information to navigate and act. For a more detailed approach I recommend this book by Richard Passingham.

The pathway from light entering the eye to the processing of visual information in the brain is a complex sequence involving several steps. Here's an overview:

- Light enters the eye: Light passes through the cornea, which bends the light rays, and then through the aqueous humour and the pupil into the lens. The lens focuses the light onto the retina, the light-sensitive layer at the back of the eye.

- Transduction: The retina contains two types of photoreceptors, rods and cones, which respond to light. Cones are responsible for colour vision and function best in bright light, while rods are more sensitive to low light and provide peripheral and night vision. The photoreceptors in the retina convert the light into electrical signals in a process called phototransduction.

- Signal processing in the retina: These electrical signals are sent to bipolar cells and then to ganglion cells. Horizontal and amacrine cells in the retina help integrate the information, providing some preliminary processing by laterally-inhibiting nearby cells so as to increase the sharpness and contrast of the resulting "image".

- Optic nerve: The axons of the ganglion cells bundle together to form the optic nerve, which carries the visual information to the brain.

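- Optic chiasm: The optic nerves from both eyes meet at the optic chiasm, where the information from the nasal (inner) half of each retina crosses to the opposite side of the brain. As a result, each hemisphere receives input about the opposite half of the visual field from both eyes, which supports binocular vision.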

- Lateral geniculate nucleus (LGN): From the optic chiasm, the visual information travels to the LGN in the thalamus, where it is processed and relayed to the primary visual cortex.

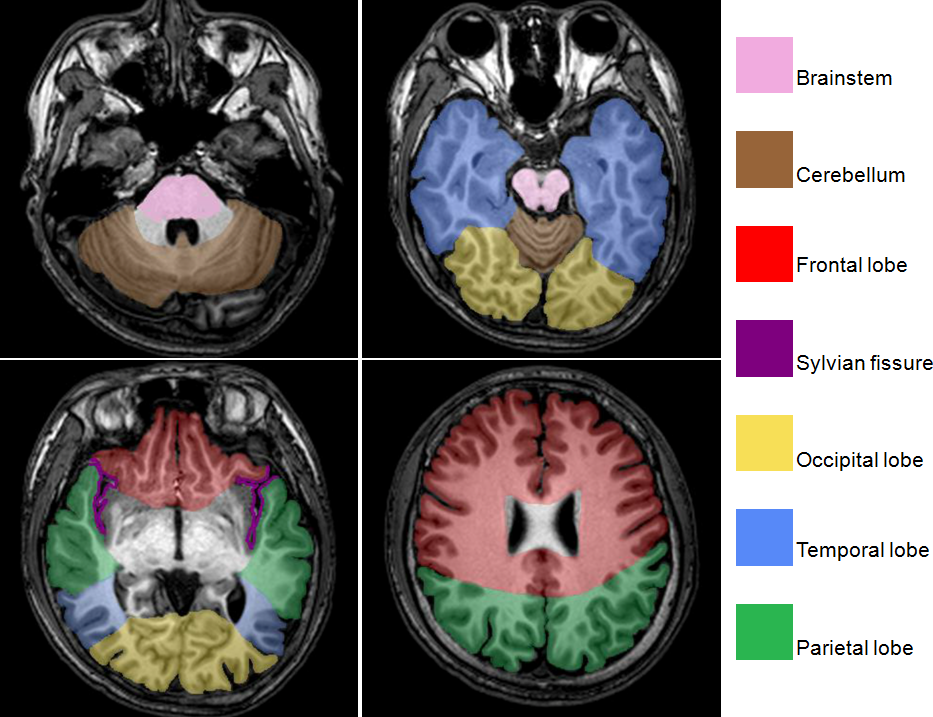

- Primary visual cortex (\(V_1\)): Located in the occipital lobe, \(V_1\) receives the visual information and begins the complex processing required to interpret it. The visual information is organized retinotopically, preserving the spatial organization of the retina.

- Higher visual areas: From \(V_1\), the information is sent to other visual areas such as \(V_2\), \(V_3\), \(V_4\), and \(V_5\), each of which processes different aspects of the visual scene like shape, colour, motion, and depth.
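The lateral inhibition mentioned in the retinal processing step can be illustrated with a toy one-dimensional model. This is a minimal sketch, not a model of real retinal circuitry: the function name `lateral_inhibition`, the inhibition `strength` of 0.2, and the input values are all assumptions chosen for illustration.

```python
def lateral_inhibition(signal, strength=0.2):
    """Each cell outputs its input minus a fraction of its two neighbours' inputs."""
    n = len(signal)
    out = []
    for i, centre in enumerate(signal):
        left = signal[max(i - 1, 0)]        # border cells reuse their own value
        right = signal[min(i + 1, n - 1)]
        out.append(centre - strength * (left + right))
    return out


# A step edge: a dim region followed by a bright region (hypothetical intensities).
edge = [1.0] * 4 + [2.0] * 4
response = lateral_inhibition(edge)

# The cell just before the edge dips below its plateau and the cell just
# after it rises above its plateau, so the edge is exaggerated.
print(response)
```

The dip on the dim side and the peak on the bright side are what "increasing sharpness and contrast" amounts to in this toy setting.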

The currently dominant view of how the last step above (the higher visual areas) works is the two-stream hypothesis. According to it, after initial processing in the primary visual cortex \(V_1\), visual information is divided into two separate pathways or "streams": the dorsal one processes spatial information ("where"), particularly the location of objects and the guidance of movements relative to them, and the ventral one recognizes objects and identifies "what" something is, along with attributes like colour and shape.

Scanning

The last step, processing in the higher visual areas, is where things get fuzzy. Most of our daily navigation tasks require a combination of "what" and "where", so both streams are active, often in parallel. However, in controlled lab experiments, if a person is asked to focus on a simple task which requires only one type of information processing, individual isolated active regions in the brain can be identified. Most of our cognitive neuroscience knowledge has been obtained in such a way. By scanning people while they go about their tasks we can see which regions of the brain are involved in which mental activities.

Positron emission tomography (PET) scans rely on radioactive tracers, biologically active molecules, like glucose or a drug, which are labeled with a radioactive isotope that emits positrons, such as fluorine-18. The radiotracer is then introduced into the body, typically via injection. Depending on the molecule and its chemical properties, different tissues in the body will take up the radiotracer at different rates. As the radioactive atoms in the tracer decay, they emit positrons, which in turn collide and annihilate with the nearby electrons. This releases two gamma rays that move in opposite directions. The PET scanner, which surrounds the patient, detects these gamma rays and pinpoints the location of the radiotracer in the body. Using many such signals, a 3D reconstruction of how the molecule reacts with the tissues can be constructed.

Magnetic resonance imaging (MRI), based on nuclear magnetic resonance, produces detailed images of the body's internal structures by exploiting the magnetic properties of hydrogen protons in water molecules. Protons, specifically the hydrogen nuclei abundant in our body due to the high water content, behave like tiny spinning magnets because of their intrinsic property called "spin." This spin gives them a magnetic moment. In the absence of an external magnetic field, the magnetic moments of these protons are oriented randomly. However, when placed inside the strong magnetic field of an MRI machine, slightly more protons align with the external magnetic field (the low-energy state) than against it, producing a small net magnetization along the field. At this point an external radiofrequency (RF) pulse, essentially a short burst of electromagnetic radiation in the radiofrequency range, is applied. It temporarily excites the protons within the tissues of the body, tipping the net magnetization away from its equilibrium alignment with the static magnetic field. After the RF pulse is turned off, the protons begin to relax back to their original, lower energy state, realigning with the main magnetic field. As they do so, they emit RF signals, which are then detected by the MRI machine's coils. This emitted RF signal contains the information necessary to produce the MRI image. The contrasts in the image, which distinguish different tissues, arise from variations in the density of protons and the relaxation times of those tissues.
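To make the role of relaxation times concrete, here is a small sketch using the standard textbook exponential model of relaxation: longitudinal magnetization recovers as \(M_z(t) = M_0 (1 - e^{-t/T_1})\) and transverse magnetization decays as \(M_{xy}(t) = M_0 e^{-t/T_2}\). The tissue names and the \(T_1\)/\(T_2\) values below are illustrative placeholders, not measured values, and the function names are my own.

```python
import math


def longitudinal_recovery(t, t1, m0=1.0):
    """M_z(t) = M0 * (1 - exp(-t / T1)): regrowth along the main field."""
    return m0 * (1.0 - math.exp(-t / t1))


def transverse_decay(t, t2, m0=1.0):
    """M_xy(t) = M0 * exp(-t / T2): decay of the detectable signal."""
    return m0 * math.exp(-t / t2)


# Hypothetical tissues with different relaxation times (seconds).
tissues = {
    "tissue_a": {"t1": 0.8, "t2": 0.07},
    "tissue_b": {"t1": 1.3, "t2": 0.10},
}

readout_time = 0.05  # seconds after the RF pulse (an arbitrary choice)
signals = {
    name: transverse_decay(readout_time, p["t2"]) for name, p in tissues.items()
}

# Same proton density, different T2: the two tissues give different signal
# strengths at the same readout time, which is one source of image contrast.
print(signals)
```

Choosing when to read out the signal changes how strongly the two tissues differ, which is loosely why different scan settings emphasise different tissue properties.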

MRI provides a static structural view of brain matter. The most popular approach to capture not only structure, but activity as well, is to use functional MRI (fMRI). In essence, active neurons in the brain use more oxygen than inactive ones. As they consume oxygen from blood, the proportion of oxygenated to deoxygenated hemoglobin changes. This change affects the magnetic properties of blood. When placed in a magnetic field (as is the case in an MRI machine), deoxyhemoglobin creates small magnetic field variations around blood vessels. Oxyhemoglobin, in contrast, does not distort the local magnetic field. fMRI relies on this difference, called the blood-oxygen-level-dependent (BOLD) signal, to detect changes in brain activity while a person focuses on a certain mental task.

Importantly, scans such as fMRI show meaningful increases in brain activity only relative to a baseline condition. A person who likes Pepsi shows increased brain activity in the ventromedial prefrontal cortex when drinking it, compared to when not drinking it. But similar activity in the same region is also shown if a person likes Coke and drinks Coke. It becomes evident that these scans, while useful, reveal correlations with mental activity rather than causes.
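The comparison against a baseline can be sketched as a simple subtraction. This is a deliberately naive illustration: real fMRI analyses involve statistical modelling that is not shown here, and the region names, signal values, and the `contrast` helper are all made up for the example.

```python
from statistics import mean


def contrast(task, baseline):
    """Mean signal difference (task minus baseline) for each region."""
    return {
        region: mean(task[region]) - mean(baseline[region]) for region in task
    }


# Hypothetical BOLD readings in arbitrary units, three samples per condition.
baseline = {"vmPFC": [100.0, 101.0, 99.0], "V1": [100.0, 100.0, 100.0]}
task = {"vmPFC": [103.0, 104.0, 102.0], "V1": [100.0, 101.0, 99.0]}

diff = contrast(task, baseline)
print(diff)  # only vmPFC differs from its baseline in this toy data
```

Neither condition means anything in isolation here. Only the difference between them is interpretable, and even then it says which region changed, not why.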

Perception

It turns out that some regions in the brain are mapping stages where spatial layouts of the external world are associated almost one-to-one with spatial layouts in the brain. This is the case with retinotopy in the primary visual cortex \(V_1\). When magnetic pulses are carefully applied over the visual cortex, a subject can experience "flashes of light" at the locations in the visual field that correspond to the stimulated part of the cortex. Similarly, in the primary somatosensory cortex \(S_1\) different body parts have specific and organized representations. The size of each body part in the representation corresponds to the number of sensory neurons and the sensitivity of that part, not to its actual size.

Clearly the brain processes a lot of information from different modalities. To aggregate all of it, sensor fusion is needed. Within a single modality, integration is achieved by simply connecting the areas processing different signals, e.g. in vision, to connect colour and shape one needs neural connections from the area that processes colour to the area that processes shape. Across different modalities, integration is achieved by having "multimodal" areas like the parietal cortex and the prefrontal cortex. Through scanning one can observe activity there irrespective of whether the stimuli are visual, auditory, or tactile. In synesthetes the visual area \(V_4\), which processes colour, can be cross-activated when processing words or tastes, thus creating associations between colours, words, and other sensory signals.

The other parts of the visual cortex, \(V_2\), \(V_3\) and so on, continue the processing of information in a hierarchical way. The earlier stages recognize patterns while the later ones integrate them. This setup allows for strong viewpoint, contrast, size, and illumination invariance when it comes to object recognition. In fact, early stage areas do respond differently if the object is rotated, but later stage areas do not. The lateral occipital complex (LO) is crucial for object recognition and responds only to rather abstract high-level signals. Similar hierarchical processing happens when assigning semantic meaning to objects.

Very roughly speaking, the ventral stream follows the sequence \(V_1\), \(V_2\), \(V_4\), LO, and continues into the inferotemporal cortex (for complex objects like places and faces). The dorsal stream follows \(V_1\), \(V_2\), \(V_3\), \(V_5\)/MT (for motion), and continues to the posterior parietal area. Only the dorsal stream has direct connections with the areas in the frontal lobe that control movement.

A much less understood problem is that of perceptual awareness, that is, the relation between perception and awareness. Blindsight is a controversial but revealing phenomenon in which individuals with damage to \(V_1\) can react to visual stimuli they report not consciously seeing. For instance, they might be able to "guess" the location or movement of an object without having a conscious experience of seeing it. From this, it seems that \(V_1\) is necessary for visual awareness, but it may not be sufficient.

In many experiments focusing on awareness two additional key areas are usually active - the anterior insula and the anterior cingulate cortex. They are interconnected and are associated with sensing the internal state of the body, emotional awareness, and reward anticipation. If a person is anesthetized, the activity in these regions drops, as does the activity in the thalamus, which relays sensory information into the brain. In general, these areas are good starting points to understand awareness and consciousness. But overall, it seems that the subjective feeling of "qualia" results from the activations of some of these areas. You cannot really feel the pain until the signal from the location where the painful event occurs reaches the brain.

Attention

Our visual feed is very rich in information. However, not all of it is useful given a particular task. We attend to only some parts of the visual information. Whether it is covert attention (fixed gaze, but attending to objects in the peripheral vision) or overt (focusing the gaze on an object of interest), two main areas are activated: the intraparietal sulcus and the frontal eye field in the prefrontal cortex. In fact, it's common to label areas like these, which are often interconnected and active at the same time, as brain networks. The dorsal attention network, containing the two regions mentioned above, is one of these and its main role is to manage visuospatial attention. It is a "top-down" attention network. The task is set up in the prefrontal cortex and, based on it, attention is used to enhance useful signal streams, inhibit useless ones, or create a template against which objects can be matched (in a matching task).

The other attention network is the ventral attention network, which is "bottom-up" and is responsible for detecting unexpected or behaviorally relevant, "salient" stimuli. Key regions here include the temporoparietal junction (TPJ) and the ventral frontal cortex. The latter is activated whenever we make decisions in visual or auditory tasks, such as driving (visual input, manual output) and talking (auditory input, vocal output) at the same time. In those cases the ventral prefrontal cortex becomes a bottleneck because computation for two different tasks has to happen sequentially, which takes time. When driving, this increased reaction time can be problematic in certain situations. That is why multitasking is hard and people should spend their attention carefully.

Memory

We do not know that much about the low-level details of how memories are formed, represented, or retrieved. But we know that the hippocampus has a lot to do with it. Its fundamental role is to represent the location of the person in space. It is activated in two cases: when solving tasks like planning how to get from point A to point B, and when conjuring up events from the past. In fact, such past events are called episodic memory - personal memories of events from your life, essentially state transitions very roughly like \((s_t, a_t, r_t, t, s_{t+1}, a_{t+1}, r_{t+1}, t+1, \ldots)\).
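The "state transition" picture of episodic memory can be written down as a small data structure. This is only a loose analogy borrowed from reinforcement learning, not a claim about how the hippocampus stores anything. The `Step` class, the `recall` helper, and the example episode are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    t: int         # time index
    state: str     # s_t: where you were or what you perceived
    action: str    # a_t: what you did
    reward: float  # r_t: how it turned out


def recall(episode: List[Step], cue: str) -> List[Step]:
    """Replay an episode from the first state matching the cue onwards."""
    for i, step in enumerate(episode):
        if step.state == cue:
            return episode[i:]
    return []  # nothing in this episode matches the cue


# A made-up episode: (s_t, a_t, r_t, t) for three consecutive moments.
episode = [
    Step(0, "home", "walk to cafe", 0.0),
    Step(1, "cafe", "order coffee", 1.0),
    Step(2, "cafe terrace", "read book", 0.5),
]

replayed = recall(episode, "cafe")
print([step.action for step in replayed])
```

A cue such as a place brings back not just one moment but the sequence that followed it, which is the property the tuple notation above is meant to capture.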

When you experience something new or form a new memory, certain patterns of neural activity occur in the cortex. This neural activity is associated with the sensory, emotional, and cognitive aspects of that experience. Over time, the hippocampus and other related structures help consolidate these memories, stabilizing and organizing them for long-term storage. During this phase, memories become less reliant on the hippocampus and more associated with specific cortical areas. When you later try to recall or remember that experience, the brain attempts to recreate or "reinstate" the original patterns of neural activity in the cortex. This reactivation is what allows you to re-experience or remember the event. The accuracy and vividness of the memory might depend on how closely the reinstated pattern matches the original encoding pattern.

Semantic memory is different from episodic memory. It consists of the semantic knowledge about object properties that is accumulated throughout our lives. Semantic memory is managed by a different anatomical system than the one managing episodic memory and hence it is possible to have damage in one but not the other. People with amnesia are unable to recall past events but seem to be able to recall semantic knowledge learned many years ago.

Reasoning

Which regions are active when one is solving reasoning tasks? Non-verbal IQ tests like Raven's Matrices are the go-to benchmarks for general intelligence on which reasoning is tested. The main brain regions activated are the parietal and the dorsal prefrontal cortex. This is expected since the parietal cortex is specialized for the representation of spatial positions, transformations, distances, and relations.

It is unlikely that we are reasoning in language. Language is just for communication, not thought. Language is known to be clearly represented by strong activity in Broca's area and Wernicke's area, and neither of these is very active while solving logical problems, even if they're phrased in language. It is likely that inner speech is not fundamental to the process of reasoning.

The Wechsler Adult Intelligence Scale test consists of verbal, visuospatial, and symbolic subtests. And yet it can be observed that the different subtests tend to give quite similar results. Even though the semantic system is activated in verbal tests like "similarity", where one has to judge how similar the relations "painter-brush" and "writer-pen" are, the parietal cortex is still heavily involved. This suggests that similarity between different objects is judged in terms of equality, similar to distances and spatial relations, and is therefore handled in the parietal lobe. The results on all subtests seem to be similar because the parietal lobe is involved in all of them. In fact, its coupling with the dorsal prefrontal cortex is quite strong and the activations are correlated. For that reason, these two regions are called the central executive network.

Deciding

This is where it gets particularly interesting. People argue over the "free will" problem as if our brain does not listen to us and does not obey our purposeful commands. Yet, in reality, it is the brain which makes the decision, as an autonomous system navigating an external environment, and only then is the conscious experience of committing to a decision (which is, in fact, already made) felt. How does the brain go about deciding what to do next? The key area is the prefrontal cortex, which stands at the top of the hierarchy of processing. It takes in sensory inputs and produces commands as outputs. This is the policy from RL.

It takes in signals from all sense modalities - vision, hearing, touch, visual space - from which it forms a representation of the current situation in the world outside. It's also connected with the amygdala and the hypothalamus, from which it is informed of the current needs like hunger, thirst, and temperature. Its outgoing connections are to the motor and premotor areas, hence it's in a position to influence actions. If the same situation repeats many times, the actions may become habitual. This is beneficial because habitual actions are faster and require less effort, but the downside is that if the situation changes suddenly, an erroneous habitual action may be executed.
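The policy analogy, together with the trade-off between deliberate and habitual actions, can be sketched in a few lines. Everything here is a toy assumption: the `Agent` class, the `habit_threshold` of 3, and the mapping from needs to actions are invented for illustration and are not a model of the prefrontal cortex.

```python
from collections import Counter


class Agent:
    # Hypothetical mapping from the most pressing need to an action.
    ACTIONS = {"hunger": "eat", "thirst": "drink"}

    def __init__(self, habit_threshold=3):
        self.habit_threshold = habit_threshold
        self.counts = Counter()  # how often each (situation, action) occurred
        self.habits = {}         # situation -> cached habitual action

    def act(self, situation, needs):
        # Fast path: a habit fires from the situation alone, ignoring needs.
        if situation in self.habits:
            return self.habits[situation]
        # Slow path: deliberate by choosing the action for the strongest need.
        action = self.ACTIONS[max(needs, key=needs.get)]
        self.counts[(situation, action)] += 1
        if self.counts[(situation, action)] >= self.habit_threshold:
            self.habits[situation] = action
        return action


agent = Agent()
hungry = {"hunger": 0.9, "thirst": 0.1}
thirsty = {"hunger": 0.1, "thirst": 0.9}

first_three = [agent.act("kitchen", hungry) for _ in range(3)]
after_change = agent.act("kitchen", thirsty)  # the habit overrides the new need
print(first_three, after_change)
```

Once the habit has formed, the agent keeps eating in the kitchen even when it is thirsty, which mirrors the erroneous habitual action described above.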

The most important aspect of the prefrontal cortex is that it's involved in planning and imagining future outcomes. And if you can imagine future outcomes, you can choose between them and devise a sequence of actions that lead to that chosen outcome. But detailed imagination far into the future is hard and perhaps for that reason people have a time preference that favours rewards in the present compared to more rewards in the future. Moreover, imagining how an action might affect us is probably as hard as imagining how other people will be affected by our actions. Based on this, links to morality and empathy can be formed.
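The time preference mentioned above is often modelled with a discount factor. The sketch below uses exponential discounting, which is a modelling assumption on my part rather than something the text commits to. The discount factor `gamma = 0.9` and the reward sizes are arbitrary.

```python
def discounted_value(reward, delay, gamma=0.9):
    """Present value of a reward received `delay` steps in the future."""
    return reward * gamma ** delay


now = discounted_value(10, delay=0)    # smaller reward, available immediately
later = discounted_value(15, delay=5)  # larger reward, five steps away

# With this discount factor the immediate, smaller reward is valued more.
print(now, later, now > later)
```

With a `gamma` closer to 1 the larger delayed reward would win instead, so the parameter can be read as a crude measure of how far ahead the agent is willing to look.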

Checking

The brain is self-regulating. It observes whether the previous action has been executed successfully and if not, tries to rectify this. Similar to perceptual awareness, we can also experience awareness of our intentions. In a classic experiment published in 1983 Benjamin Libet showed that before a voluntary action occurs there is brain activity of which we are unaware. At least for simple tasks like moving a finger (as in the experiment), the results showed that a "readiness potential" signal appears about half a second before the actual execution of the action, whereas we become aware of our desire to act only about 0.2 seconds before the action. Hence, the brain decides to act and only later do we become aware of this desire. This is a problematic conclusion only for dualists.

It is useful to be aware of one's intent to do something earlier rather than later. Given that we are aware of what we intend to do, we can then compare it to what we actually do. When we are solving complicated tasks and we make an error, the prefrontal cortex is re-engaged on the next trial. Another brain region which is commonly activated when dealing with error detection and conflict monitoring is the anterior cingulate cortex. The simple Stroop task has yielded strong experimental results highlighting the increased attention on subsequent trials once the person realizes that they've made a mistake.

Acting

After the prefrontal cortex has selected an action, the corresponding signals are sent to brain areas around the motor cortex where the signals are refined, decoded, and finally relayed down the spinal cord towards our limbs. When it comes to learning fine motor actions, such as playing a violin, the structure of interest is the cerebellum. It refines the motor actions initiated by other parts of the brain to ensure that the resulting movements are accurate, smooth, and coordinated.

As people learn manual skills, the sensory consequences of the movements become more predictable. There have been experiments where the subjects learn simple mechanical tasks well enough so that they can repeat various movements without references to any visual cues, after which the mechanics of the movement task are changed. This causes a "prediction error" when it comes to our expected sensory inputs. In turn the cerebellum gets fired up, prompting us to pay more attention on the next iteration. A similar process happens in the anterior striatum with unexpected rewards. In general, this is evidence of a more profound concept related to free energy minimization - the idea that the brain tries to minimize the "surprise" in terms of its sensory inputs.
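The prediction-error idea can be illustrated with a simple error-driven update (a delta rule), alongside "surprise" measured as negative log probability. This is a generic textbook-style sketch under my own assumptions, not an implementation of free energy minimization and not the method used in the experiments mentioned. The learning rate and the outcome sequence are arbitrary.

```python
import math


def update(prediction, outcome, learning_rate=0.5):
    """Move the prediction towards the outcome; return (new_prediction, error)."""
    error = outcome - prediction
    return prediction + learning_rate * error, error


def surprise(probability):
    """Surprise of an event with the given probability, in nats."""
    return -math.log(probability)


# Five identical trials, then the task mechanics change on the sixth.
prediction = 0.0
errors = []
for outcome in [1.0] * 5 + [0.0]:
    prediction, error = update(prediction, outcome)
    errors.append(error)

# Errors shrink while the task is stable and jump when it changes.
print(errors)
print(surprise(0.9), surprise(0.5))  # less likely events are more surprising
```

The shrinking errors correspond to sensory consequences becoming predictable with practice, and the large error on the last trial corresponds to the moment the mechanics of the task are changed.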